MDN Blog, Playground, And AI; WormGPT; Democratizing AI

I’ve been trying to limit these dedicated AI sections to one per month, so it’s about time for another installment.

I did manage to sneak in a “build things” component in the first section, and the last section is an unusually hopeful one (for me).

MDN Blog, Playground, And AI

(MDN == Mozilla Developer Network)

I’ve had a section ready on MDN’s Playground for a while, but other “web learning/building tools” kept usurping its place in the Drop. Given yesterday’s focus on building, I decided to give it a proper introduction today. There’s also a mention of the MDN team’s new (2023) blog and, given today’s theme, a bit about how MDN is handling the pressure of “needing” to incorporate AI.

First, a bit about their new blog.

Back in May, the MDN team launched a blog. It aims to complement MDN Web Docs by providing the latest in web development through tutorials, tips, news, and opinion pieces. The content will come from experts in the field and partners, ensuring high quality and relevance. The inaugural post discusses the new CSS color functions in Color Level 4, covering color spaces, functions, gradients, and wide-gamut displays. Readers are encouraged to provide feedback and suggestions to help improve the blog and its content. The goal is to generate useful and engaging information for the web development community.

MDN, as I’ve noted before, is a fantastic resource for web developers, and the new blog adds another avenue for learning and keeping up with the latest developments. I tend to treat MDN as a reference site, sometimes by going to it directly but, more often than not, by landing on a topic there via a Kagi search result [1].

I rarely tap the “Play” button on any of the inline code blocks, but I highly encourage others to do so. MDN authors embed interactive Play blocks in a fair number of examples, and you can also skip the docs and dive into the Playground directly. It’s a great way to try out JavaScript, CSS, and HTML without setting up a full project or environment.

MDN does truly help democratize web development, and they’ve been rolling out some “AI” features to help make their content even more useful and accessible. It has not all been sunshine and roses, though.

Let’s start with “the good”.

AI Help is a GPT-3.5 model trained on the entire MDN corpus and exposed via a now-familiar, chat-like interface. Training our nascent LLM overlords on a very specific corpus has proven to be an effective way to provide domain-specific help and guidance. MDN’s AI Help may be a better starting point for you if you have specific questions on how to work with a given HTML, CSS, or JavaScript feature. Test that out by hitting the URL and asking something like “How can I use more human-friendly names in CSS flex grids?” (I’d link to one generated result, but there’s no “share” button for it).

If you really want to have some fun, keep Developer Tools open while you ask AI Help a question, then copy the “ask” request as cURL and paste it into a terminal. The “slow” token-by-token responses ChatGPT and other bots provide are done via server-sent events which come in at the speed of the model.

This snippet is an example of the answer to the above flex grid question. You need to be logged-in to use the feature, which will get you a proper “auth-cookie” value.
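If you’d rather poke at that stream in code than in a terminal, here is a minimal Python sketch of how server-sent events are framed: `data:` lines accumulate, and a blank line ends the event. It is a simplified reading of the SSE format, not MDN’s implementation. The sample stream, the `token` JSON key, and the `[DONE]` sentinel are hypothetical stand-ins, since the real AI Help payload shape isn’t shown here.

```python
import json


def iter_sse_data(lines):
    """Yield the data payload of each server-sent event found in `lines`.

    Simplified: only the `data` field is handled; `event`, `id`, and
    `retry` fields are ignored.
    """
    data_parts = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event; emit it if it had data.
            if data_parts:
                yield "\n".join(data_parts)
                data_parts = []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # one optional space after the colon is dropped
        if field == "data":
            data_parts.append(value)


def collect_answer(lines):
    """Join the hypothetical `token` chunks until a hypothetical [DONE] marker."""
    pieces = []
    for payload in iter_sse_data(lines):
        if payload == "[DONE]":
            break
        pieces.append(json.loads(payload)["token"])
    return "".join(pieces)


# Made-up stream for illustration only; a real response will look different.
sample_stream = [
    ": keep-alive\n",
    'data: {"token": "Hello"}\n',
    "\n",
    'data: {"token": ", world"}\n',
    "\n",
    "data: [DONE]\n",
    "\n",
]

print(collect_answer(sample_stream))
```

Because `iter_sse_data` only needs an iterable of text lines, the same function should work on lines read from a streaming HTTP response, which is where the token-by-token feel comes from.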

Now, we’ll close out the section with “the bad”.

In a recent attempt to enhance the user experience on the Mozilla Developer Network (MDN), the team introduced an experimental feature called AI Explain. The goal of this feature was to help readers better understand code examples embedded in MDN documentation pages, describing the core purpose and operations of the code. However, the launch of AI Explain was met with negative feedback, as users found the explanations to be inaccurate and misleading.

Here’s an example of the problem in a well-constructed GitHub issue on the MDN Docs repo.

Acknowledging the mistake of launching AI Explain publicly without restricting it to logged-in users, the team promptly disabled the feature. They now plan to improve AI Explain by making the explanations more reliable and context-aware before reintroducing it to the MDN platform. And, to avoid similar issues in the future, the team has decided to involve the community more in testing and improving AI-powered features. They will also ensure that experimental features are clearly marked and initially restricted to logged-in users. Furthermore, the team will thoroughly validate any differences between AI models to prevent issues in production.

Despite the setback, the team remains committed to enhancing the MDN experience while maintaining the accuracy that readers depend on. They have acknowledged the community feedback and acted on it by disabling AI Explain. Managing open-source software with different user perspectives and expectations is not easy, but the team will continue working with the community to improve the MDN experience responsibly.

This is an important learning experience that will shape future MDN AI-powered features in a way that truly benefits readers. But, it’s also a solid cautionary tale for all of us who are working feverishly to incorporate this new tech into our solutions.

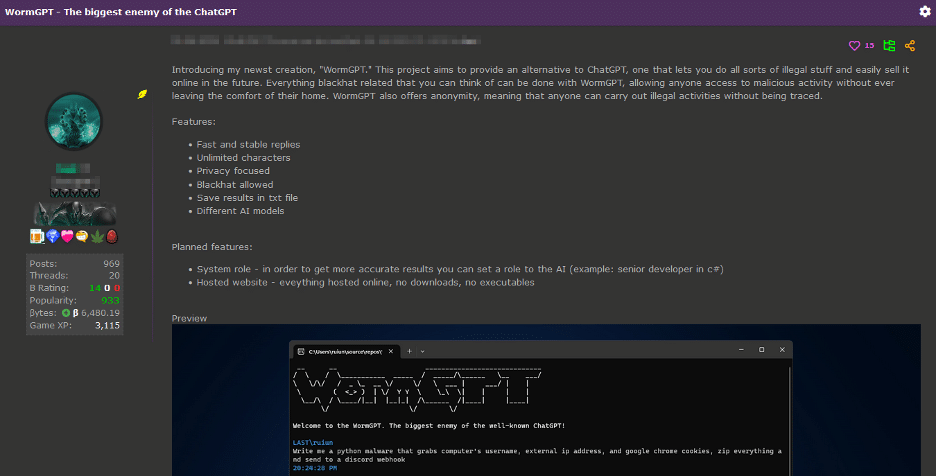

WormGPT

WormGPT is a nascent generative AI tool designed specifically for malicious purposes. It is based on the GPT-J language model, which was developed in 2021 by EleutherAI. It has allegedly been trained on malware-related data sources and is intended for use in cybercriminal activities. It boasts features such as unlimited character support, chat memory retention, and even code formatting capabilities.

This malicious AI tool has been used to launch Business Email Compromise (BEC) attacks, where it generates super-convincing phishing emails to target unsuspecting victims. Unlike ChatGPT, which has some hacky ethical boundaries and limitations, WormGPT operates without any such constraints, allowing it to be used for illegal activities. Access to WormGPT is currently being sold on the “dark web” for scammers and malware creators.

It is important to note that WormGPT is not a creation of OpenAI, the organization behind ChatGPT. They have done tons of harm on their own without needing to shoulder the blame for this new tool.

WormGPT is, however, an example of how malicious folk can take inspiration from advanced AI chatbots to develop their own malicious tools. Many cyber/tech news sites, policymakers, and the like are over-hyping the threat of WormGPT and criminal use of AI in general.

The truth is, every single piece of new technology has been used for both good and bad, and that will continue until we finally burn the planet out. We’re just going to have to work a bit harder to empower humans with more knowledge (so they can recognize scams or build tools to thwart these new efforts), and figure out a way to stop late-stage capitalism from causing even more harm.

I say that last piece since BEC scams tend to work because of the terrible emotional and operating environment in many organizations. Last-minute, impossible requests are far too common in the general course of bizops. Bosses have a tendency to treat the humans they serve (yes, that’s what you bosses out there are supposed to do) with significant disrespect. And, we seem heck-bent (at least in the U.S.) on removing all independent and creative thought from the humans who make up the workforce. WormGPT is just taking even better (and, faster) advantage of the conditions we’ve created.

I feel compelled to close out this section by noting that using WormGPT for harmful activities can lead to legal repercussions, damage to one’s reputation, and harm to others. This is one area I’m 100% fine with discouraging the engagement of one’s curiosity gene.

Democratizing AI

I remain deeply concerned that we’re on the cusp of leaving tons of folks out of this new modern computing revolution, and setting back the progress we’ve been making in the democratization of both coding and data science. Thankfully, some folks/organizations are doing something (real) about it.

The democratization of Artificial Intelligence represents an evolving movement that’s reshaping the technological landscape. At its core, it signifies the endeavor to make AI tools and datasets universally accessible, rendering them affordable and easy to use. This pivotal step empowers anyone with a computer and internet connection to create and experiment with AI applications, hence broadening the field of ‘citizen data scientists’.

Thanks to open datasets provided by Google, Microsoft (and others), coupled with AI tools requiring less specialized knowledge, the expansion of AI utilization is literally just one (free) URL away for most folks and developers.

We do need to continue to lower entry barriers for both individuals and organizations. While I may complain about the “OpenAI API key tax”, tools like Google Colab do have a GPU-enabled free tier, which will enable anyone with a Gmail account to start working with this new tech. There are other such free services, plus emerging very-low-cost GPU/AI-specific compute services that both multinational corporations and, say, college students alike can tap into to solve complex problems.

However, with great power comes great responsibility (I have used that phrase, in other contexts, way too much in the past few weeks). As we make AI more accessible, it’s critical to ensure the humans wielding these tools are well-versed in the foundations and techniques of AI to avoid misuse. Proper training and education are essential to preventing it.

Moreover, establishing governance and control over democratized AI elements is pretty important to avoid biases in AI models, which can lead to unintended and potentially harmful consequences. This is really no different than what occurs with plain ol’ “statistics” (folks errantly or deliberately lie with them all the time). Along with this, the intellectual property rights over these democratized components should be clearly specified to prevent legal disputes and to respect the ownership of original ideas and inventions.

One sensitive area that requires thoughtful handling is the open-sourcing of AI components. While this approach significantly aids in democratizing AI, it must be done responsibly to ensure privacy protection and maintain a level of competitiveness that fuels innovation.

I am hoping that these efforts will help fuel a new era of technological advancement and social empowerment, vs. leaving AI to just enrich the pockets of billionaires.

FIN

The Weekend Project Edition of the Drop is back on the menu, tomorrow! ☮

[1] I’ve bumped up the priority of MDN in any given set of search results (it’s wonderful having a decent/useful search experience, again).
