Where Did the/my [Software Development] Long Tails Go? Meet NVIDIA’s AI Red Team; Scrollytelling Book Bans; Bash Quotes

The best-laid plans of mice and hrbrmstrs often go awry, and have done so in plenty today. We’ll (mostly) have to leave the teased pure-tech stuff to tomorrow’s WPE.

Where Did the/my [Software Development] Long Tails Go?

I have long fancied (and still do fancy) myself a builder of what I’ll kindly self-describe as “esoteric” utilities and (not so much these days) R packages. While this section is most assuredly not about “me”, for every {hrbrthemes}, pewpew, and {waffle} in my GH (et al.) there are dozens of {arabia}s.

This thought crossed my mind after encountering two things this week:

- a spiffy new (dev-mode) R package by coolbutuseless, {carelesswhisper}, that seamlessly wraps the FOSS Whisper speech-to-text library
- a year-ish old Substack post dubbed “Where Did the Long Tail Go?” by Ted Gioia.

First, a TL;DR for you on Gioia’s tome and why it + Mike’s R package birthed this section, which is ultimately about what we choose to work on as coders/builders/creators. Don’t worry, we’ll quickly move past the “sales-y” focus with a two-❡ summary.

The Long Tail concept is a business strategy that ostensibly lets companies realize significant profits by selling low volumes of hard-to-find items to many customers, instead of only selling large volumes of a small number of popular items. The basic idea is that you can make a lot of coin selling to nigh infinitesimally tiny groups of consumers. These groups are underserved, and although each cohort has only a few members, attracting a lot of cohorts adds up to a meaningful opportunity. The idea centers on products and services that each have almost no customers, yet have low distribution and production costs and are readily available for sale. Amazon is a “good” example of this, though its “Long Tail” is ultimately shored up by profits from overcharging us for cloud services.

Long Tail concepts are applied across many areas, including online business, mass media, microfinance, user-driven innovation, knowledge management, social network mechanisms, economic models, marketing, and IT Security threat hunting within a SOC (which is another reason Gioia’s post gelled with me). Theoretically, the total sales from the Long Tail can in aggregate exceed sales from the top of the curve, and (again, theoretically) the biggest sellers can end up accounting for less than 50% of total sales, making the tail of the distribution more important in aggregate than blockbuster sales.
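To put some (entirely made-up) numbers on that “in aggregate” claim, here’s a toy Bash + awk sketch. It assumes, purely for illustration, that sales by popularity rank follow a Zipf-like curve, sales(rank) = 1/rank^s. The curve, the exponent, the catalog sizes, and the head_share helper are all my hypothetical choices, not anything from Gioia’s post.

```bash
#!/usr/bin/env bash
# Hypothetical illustration: assume sales for the item at popularity rank r
# are proportional to 1 / r^s (a Zipf-like curve), then ask what share of
# total sales the top k items (the "head") capture.
head_share() {
  local n=$1 k=$2 s=${3:-1}   # catalog size, head size, Zipf exponent (default 1)
  awk -v n="$n" -v k="$k" -v s="$s" 'BEGIN {
    head = 0; total = 0
    for (r = 1; r <= n; r++) {
      x = 1 / (r ^ s)           # assumed sales for the item at rank r
      total += x                # sales across the whole catalog
      if (r <= k) head += x     # sales from the "blockbuster" head only
    }
    printf "%.1f\n", 100 * head / total   # head share, as a percentage
  }'
}

head_share 1000 100      # small catalog: top 100 items vs. 900 tail items
head_share 100000 100    # big catalog: same top 100 items vs. 99,900 tail items
```

With s = 1, the same top 100 items capture about 69% of sales in a 1,000-item catalog but only about 43% in a 100,000-item one, which is the “theoretical” tail-outsells-head scenario. Pass a steeper exponent (e.g., head_share 100000 100 1.5) and the head grabs a much bigger share again.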

Gioia does go on to shatter the myth of the Long Tail (in a successful business context), but ultimately suggests we need to care about the Long Tail for some good reasons, which I’m adapting to a tech/creative focus.

I used to hang out quite a bit in the “R” section of StackOverflow, gravitating towards ways to help solve niche problems presented by OPs. The aforementioned {arabia} package is one such example. It lets researchers extract useful data from Lowrance¹ marine track loggers. I will never use that package IRL. Most humans will never even know that package exists. But, it sure helped one scientist who really needed to get this data into R, and seems to have been useful for at least seven others. While I may never come close to “saving the world”, that one R package could be a catalyst for helping some scientist save a species or unearth some new, important discovery.

Mike makes similar “cool but useless” R packages. I think I’ve mentioned this before, but Mike is one of my “heroes” in coder-land. He is as ebullient as Alex Kamal, incredibly talented, gets crazy ideas, and runs wildly well with them.

(Please excuse the forthcoming peppering of unstoppable Python rants.)

Multi-year corporate marketing attempts aside, R is and will be a niche language. It’s just too easy to poorly write terrible Python code that barely accomplishes some goal just well-enough to let folks move on to the next poorly written, terrible Python project. And, with {reticulate} one can lazily just rely on that Python bridge to leverage poorly written Python code from R. While this is one of R’s strengths, there is something advantageous to having native functionality in just R (even thanks to a compiled and wrapped library). It significantly reduces complexity and prevents us from committing acts of genocide when we run into the inevitable “can’t get the Python dependencies working” trouble that befalls all things Python.

I’ve been using the (Apple Silicon-optimized) Whisper executable to accomplish my infrequent speech-to-text needs, and had considered doing what Mike did when said library burst onto the scene, but just…didn’t².

Then, I scrolled back through a few years of dev-mode and CRAN R package creations (I monitor the entire R ecosystem daily) and saw only a handful of folks like Mike still pursuing esoteric niches. Now, I am very thankful there’s a fine group of humans tackling the challenges of contributing to the core R ecosystem and leveling up multilingual package translations, so there is some “Long Tail” work going on that is valuable and laudable.

But, we modern humans truly seem to be fully in “Short Tail” mode these days, chasing after ⭐️, signups, likes, subscribes, and 💰. It’s the jet fuel powering TikTok and IG, and turning otherwise possibly content, plain ol’ humans into wannabe influencers.

In developer land, I fear we (and I am 100% including myself in this chastisement) will be increasingly content to replace genuine innovation with “Make sure you set your OpenAI key in the OPENAI_API_KEY environment variable”, and leave the lone scientist, desperate to get data out of a proprietary logger format, too far behind.

I don’t have a “solve” for this, but I’m hoping to further reinvigorate the “coolbutuseless” side of me this year and would like to challenge anyone who manages to get to this ❡ to “be more like Mike” as well. Why? Well, I’ll let Gioia try to convince you…

We should nurture Long Tail endeavors because:

- They create a more pluralistic, diverse, and multifaceted society
- They remind us that not all riches are measured in monetary terms
- They serve as a counterweight to group-think and narrow-mindedness
- Genuine breakthroughs often start on the fringes before entering the mainstream
- Our lives are genuinely more fulfilling in a society with more options rather than fewer

Those deliverables might not add up to a big, fat bank account. But they create a healthy culture. So, the Long Tail does have a role for us — but it should be built on our wisdom and generosity, not our business[/influencer] plans.

Meet NVIDIA’s AI Red Team

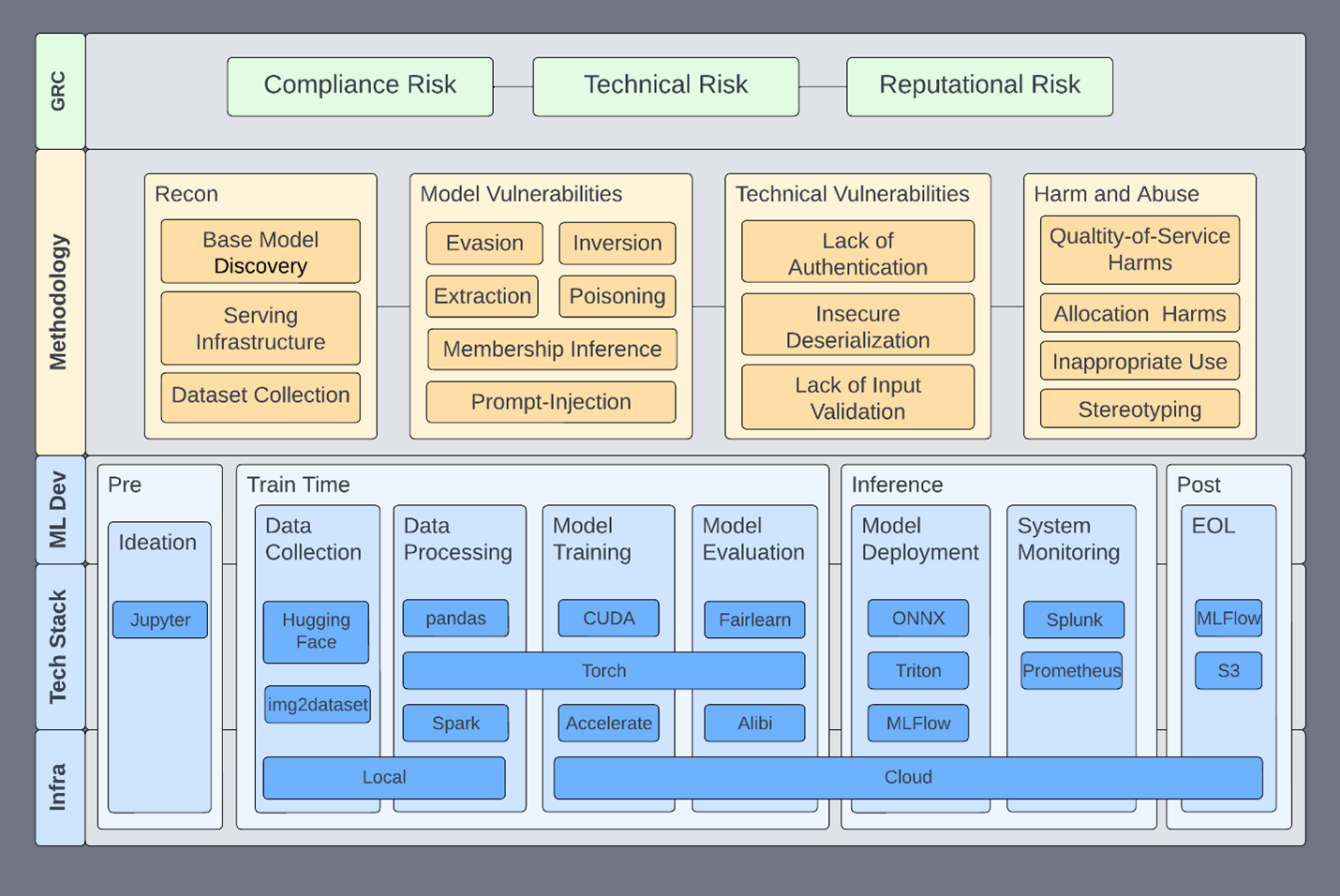

NVIDIA is using a technique known as “red teaming” to make sure its AI and machine learning (ML) systems are safe and sound. In red teaming, clever humans try to find as many failure modes of a particular tech or process as they can, so those failures can be known and (possibly) fixed. The practice is getting more and more important as “AI” invades literally every aspect of our lives, even as I type this sentence, because that pervasiveness breeds new risks that organizations need to sniff out.

NVIDIA’s own AI red team is a ragtag fugitive fleet of spiffy security folks and data scientists. Their job is to find and deal with any issues that could threaten the safety of NVIDIA’s ML systems. They’ve got a clear roadmap that keeps them focused on ML systems and on the threats NVIDIA wants eradicated.

They zero in on particular problems in different areas of the ML workflow, infrastructure, or technologies. This way, they can point out what could go wrong in a given system. This also helps them single out, analyze, and explain any part of the system in relation to the whole.

One thing the AI red team emphasizes is the importance of governance, risk, and compliance (GRC). They make sure the company’s security requirements are understood and followed through on. They keep an eye on risks that could harm technical performance, business reputation, or (shudders) compliance. Each of these risk categories gives a different view of the underlying system, and the risks can sometimes stack up.

An “assessment stage” is a critical component of their approach. It involves scouting out the landscape, looking for technical weaknesses, dealing with model vulnerabilities, and managing potential harm-and-abuse scenarios. The model vulnerabilities tend to come from active ML security research areas, such as extraction, evasion, inversion, membership inference, and poisoning.

If you haven’t been developing your own internal “AI Red Team”, NVIDIA’s post provides an amazingly helpful blueprint for how to do so. If you do anything with online ML/AI systems at all, you will likely want to dig into the post and work with your organization to figure out how such a team could help keep it safe and resilient.

Scrollytelling Book Bans

(We’ll keep this short given the length of today’s Drop.)

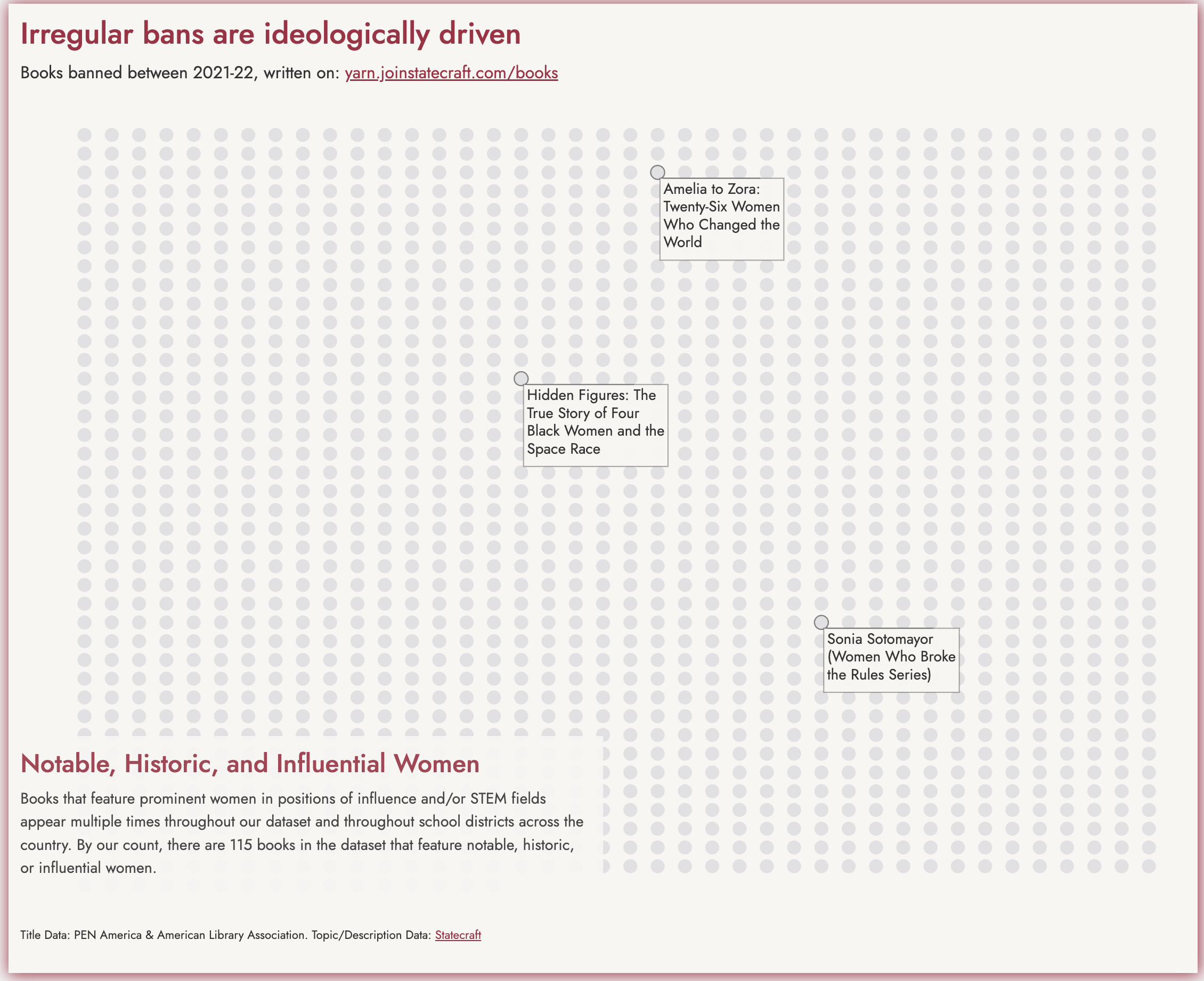

Statecraft “deliver[s] deep, visually striking insights about American policies directly to your inbox”, and Arman Madani — Statecraft’s founder — has a great scrollytelling piece on the 1,626 banned books (in 🇺🇸) of 2021-2022. Here’s the pitch from the post:

The non-profits PEN America and the American Library Association keep a catalog of banned books in the United States up to the 2021-22 academic year. In this 1 academic year alone, 1600+ books were irregularly banned from 138 school districts across America; 3.8 million students have lost access to information in varying degrees as a result. We’ve expanded the catalog of banned books by scraping open source data from publishers to give us the clearest possible look at common features of these 1,626 irregularly banned books.

The section header shows one featured selection from the scrollytelling piece. It’s a solid example of a data-driven, highly focused narrative. Plus, Arman provided all the data behind the project, so you can tell your own stories with it.

Bash Quotes

Yesterday, one of my $WORK team members flagged this kind-of-scary arbitrary code execution problem tied to a certain method of obtaining a TLS certificate.

You should read that just to see how horrible the world of TLS certificates truly is.

I read the whole thread and learned something about Bash I really should have already known, but did not.

There are some cool things you can do with Bash shell parameter expansion, one of which is the ${parameter@operator} parameter transformation idiom (available since Bash 4.4).

The expansion is either a transformation of the value of the parameter or information about the parameter itself, depending on the value of the operator.

Each operator is a single letter, and the letter used in the GitHub issue thread is Q. Applying it yields a string that is the value of the parameter quoted in a format that can be reused as input. So, when a value has to be re-parsed by the shell (say, via eval), ${var@Q} helps keep untrusted input from being executed as code.
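Here’s a small sketch of @Q alongside two of its sibling operators, E and A, assuming Bash 4.4 or newer (the release that introduced these transformations). The variable names and the hostile-looking string are made up for illustration; the key property it checks is that the Q output, re-read by the shell, gives back the original string without running anything.

```bash
#!/usr/bin/env bash
# Assumes Bash 4.4+, where ${parameter@operator} transformations first appeared.
# A made-up, hostile-looking value; inside single quotes nothing here executes.
untrusted='it'\''s $(echo pwned) and `echo pwned-too`'

# Q: quote the value in a form the shell can safely read back as input.
quoted=${untrusted@Q}
echo "$quoted"

# Re-parsing the quoted form yields the original string; no command runs.
eval "roundtrip=$quoted"
[[ $roundtrip == "$untrusted" ]] && echo "round-trip OK"

# E: expand backslash escape sequences, the way $'...' quoting would.
esc='tab:\there'
echo "${esc@E}"

# A: produce a declare/assignment statement that would recreate the variable.
declare -i count=3
echo "${count@A}"
```

The exact quoting style Q emits can vary between Bash versions, so compare round-trip results rather than the quoted text itself.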

You can try the two examples provided in the thread as proof at any Bash (4.4+) prompt:

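```bash
var='inject $(echo arbitrary code)'; eval "echo \"$var\""   # the $(...) runs: prints "inject arbitrary code"
var='inject $(echo arbitrary code)'; eval "echo ${var@Q}"   # quoted: prints "inject $(echo arbitrary code)" literally
```

Just a quick TIL I thought I’d pass on.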
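If you’re stuck on a Bash older than 4.4 (macOS still ships 3.2 as /bin/bash), the printf builtin’s %q format also quotes its argument in a form that can be reused as shell input. The sketch below is my own addition, not something from the thread, and it ends with what is usually the safest move of all: skip eval and keep the command and its arguments in an array.

```bash
#!/usr/bin/env bash
# printf %q quotes its argument so it can be reused as shell input,
# and works on Bash versions that predate ${var@Q} (e.g. 3.2).
var='inject $(echo arbitrary code)'

# -v stores the quoted result in $safe rather than printing it.
printf -v safe '%q' "$var"
eval "echo $safe"              # the $(...) is printed as text, not executed

# Better still: no eval at all. Keeping the command and its arguments in an
# array passes $var as a single argument that is never re-parsed as code.
cmd=(echo "$var")
"${cmd[@]}"
```

Both approaches print the string with its $(...) intact; the array version simply never gives the shell a chance to interpret it.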

FIN

Whew. This was an unexpectedly long post, but hopefully at least one of the sections provided either direct use or some food for thought. ☮

¹ …of Arabia; I’m here ’til Thursday (oh, today!). Try the veal and tip the waitstaff.

² It’s not like I’ve stopped making “esoteric” things, as evidenced by a recent Golang DNS experiment.
