Jailbreaking ChatGPT 4’s Code Interpreter; The Blob Toy; Freeloading On Cloud Services; AWS Docs GPT

Programming note: Elder health issues continue to disrupt schedules, hence no WPE Friday, and a different Bonus Drop today than the one originally intended. Thankfully, it looks like things are progressing in a positive direction.

I’ve been using the insatiable appetite for collecting interesting bits across the internets that powers these Drops to help distract both myself and my spouse. So, today’s Bonus Drop should have something for everyone.

Jailbreaking ChatGPT 4’s Code Interpreter

If you have me in your feed on 🐘, you likely saw my very lightweight attempt to see just how solid ChatGPT 4’s Code Interpreter “safety model” is. If you aren’t a ChatGPT 4 machinator, you are a better human than I am. But, if you are part of the ca[i]bal I’m in, and haven’t tried this new feature out yet, I think you might want to. To enable it, just go into your “settings” and tap the box in the “beta” section, then make sure you select the interpreter plug-in in the model selector box.

The TL;DR on this code interpreter is that OpenAI spins up a semi-permanently-tied-to-you Linux container in Azure that has some sandboxing in play, and a Python kernel in a Jupyter notebook wired up to ChatGPT 4. This particular personality of ChatGPT 4 has been instructed on how to orchestrate said notebook, but it’s also been guided to avoid “unsafe” behavior, or at least lie about what it can and cannot do.

I managed to bypass the controls pretty easily, as have a few others, but I found Nikola Jurkovich’s write-up (and the comments thread, where someone from OpenAI actually engaged with them!) to be a great, accessible read on the subject. Nikola covers six examples of GPT-4 breaking its rules:

Example 1: Every session is isolated

Example 2: Don’t run system commands

Example 3: Resource limits

Example 4: Only read in /mnt/data

Example 5: Only write in /mnt/data

Example 6: Only delete in /mnt/data
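If you want to poke at those six claims yourself, here is a minimal sketch of the kind of cell you could paste into a Code Interpreter session. It sticks to the Python standard library, assumes a Linux container with `/mnt/data` as the designated data directory, and `probe_sandbox` is a hypothetical helper name, not anything OpenAI ships. I'm not claiming what the sandbox will report; run it and see.

```python
import os
import platform
import resource  # POSIX-only; assumes the Linux container Code Interpreter runs in
import shutil
import subprocess


def probe_sandbox(data_dir="/mnt/data"):
    """Gather a few facts relevant to the six rules above.

    probe_sandbox is a hypothetical helper for illustration, not an OpenAI API.
    """
    report = {}
    # Example 1 (heuristic): compare this value across two separate chats
    report["hostname"] = platform.node()
    # Example 2: can the kernel spawn an external process at all?
    uname = shutil.which("uname")
    if uname is not None:
        result = subprocess.run([uname, "-a"], capture_output=True, text=True)
        report["uname"] = result.stdout.strip()
    else:
        report["uname"] = None
    # Example 3: CPU count and the address-space limit the kernel reports
    # (a value equal to resource.RLIM_INFINITY means "no limit set")
    report["cpu_count"] = os.cpu_count()
    report["address_space_limit"] = resource.getrlimit(resource.RLIMIT_AS)
    # Examples 4-6: is anything outside data_dir visible or writable?
    report["root_listing"] = sorted(os.listdir("/"))
    report["tmp_writable"] = os.access("/tmp", os.W_OK)
    report["data_dir_exists"] = os.path.isdir(data_dir)
    return report


for key, value in probe_sandbox().items():
    print(f"{key}: {value}")
```

The hostname check is only a rough signal for session isolation; two sessions could land on different hosts and still share storage, or vice versa.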

FWIW, the OpenAI person’s initial response is pretty solid: if you spin up a container with a Jupyter notebook in some cloud, somewhere (odd they chose “AWS” as the example vs. the one that pays their bills), you would expect to be able to do whatever you want in said notebook.

My only “hot take” is that ChatGPT already had/has an “inaccurate” reputation for being quite capable of lying. Until now, it really did not lie (you all know that). Now, it’s been programmed to lie to you, all in the name of “safety”.

The Blob Toy

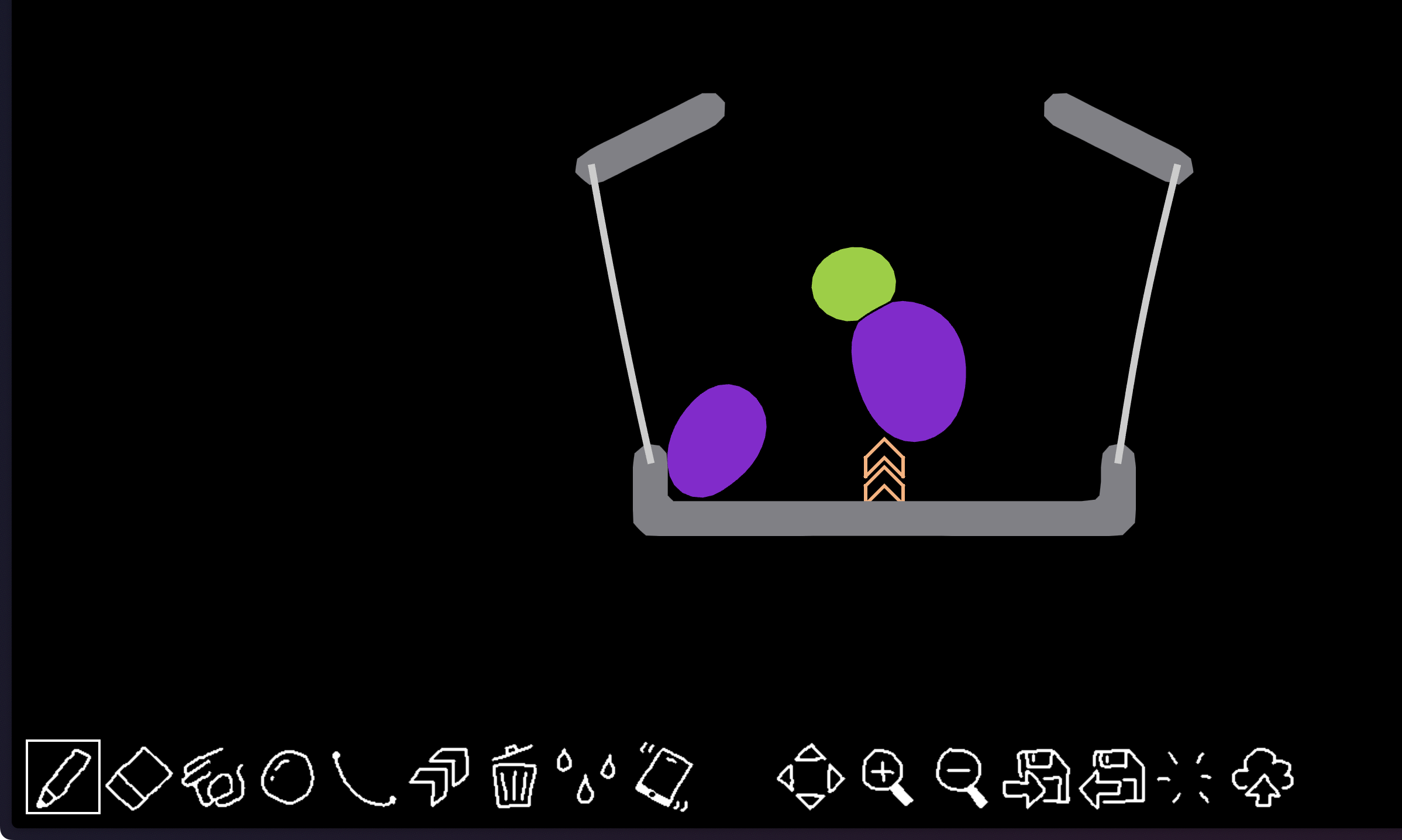

I had known about @saharan’s (GH profile link) cyber exploits (using that term in a “good” way) for a while. I also saw their post on hooking up ChatGPT responses in Python when I was spelunking said topic for some work ops. But I never really poked around their whole site until recently, which is how I discovered The Blob Toy. The section header is an image of the initial screen.

The initial canvas is pre-populated, but you can draw/place whatever you like on it from the bottom toolbar. You can then perform actions (dropping or poking elements) that are simulated on elastic bodies with something called “Position-Based Dynamics” (PBD). This is a simulation method that works directly on object positions instead of forces or velocities. In traditional force-based simulations, internal and external forces are calculated first, accelerations are then determined from Newton’s laws of motion, and velocities and positions are finally obtained by integrating those accelerations over time. Position-Based Dynamics skips the velocity layer and works directly on positions.

The main advantage of this approach is that objects can be manipulated during the simulation by directly changing their positions. It works by defining constraints and then projecting object positions to satisfy the constraints. Linear and angular momenta are conserved during the projection by choosing position corrections along the gradient of the constraint functions. The approach allows constraints to be handled in a general way, and the position projection solver is described as being fairly easy to implement.
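To make the projection idea concrete, here is a minimal 2D sketch of one PBD step with a single constraint type: a distance constraint C(p1, p2) = |p1 - p2| - d between two particles. It follows the general outline of the Müller et al. PBD formulation (apply external forces to velocities, predict positions, iteratively project them onto the constraints, then derive velocities from how far things moved). It is not The Blob Toy’s code, and the function names `project_distance` and `pbd_step` are hypothetical.

```python
import math


def project_distance(p1, p2, w1, w2, rest, stiffness=1.0):
    """Nudge p1 and p2 (in place) so that |p1 - p2| moves toward rest.

    w1, w2 are inverse masses; an inverse mass of 0 pins that particle.
    """
    dx, dy = p1[0] - p2[0], p1[1] - p2[1]
    dist = math.hypot(dx, dy)
    wsum = w1 + w2
    if dist == 0.0 or wsum == 0.0:
        return  # degenerate: no direction to move along, or both pinned
    c = dist - rest                  # constraint value C(p1, p2)
    nx, ny = dx / dist, dy / dist    # unit gradient of C with respect to p1
    s = stiffness * c / wsum
    # Corrections along the gradient, weighted by inverse mass
    p1[0] -= w1 * s * nx
    p1[1] -= w1 * s * ny
    p2[0] += w2 * s * nx
    p2[1] += w2 * s * ny


def pbd_step(x, v, w, constraints, dt, gravity=(0.0, -9.81), iterations=10):
    """Advance positions x and velocities v (lists of [x, y]) by one step.

    constraints is a list of (i, j, rest_length) distance constraints.
    """
    n = len(x)
    # 1. External force (gravity) updates velocities of non-pinned particles
    for i in range(n):
        if w[i] > 0.0:
            v[i][0] += dt * gravity[0]
            v[i][1] += dt * gravity[1]
    # 2. Predict positions from the updated velocities
    p = [[x[i][0] + dt * v[i][0], x[i][1] + dt * v[i][1]] for i in range(n)]
    # 3. Project predicted positions onto the constraints, one at a time
    for _ in range(iterations):
        for i, j, rest in constraints:
            project_distance(p[i], p[j], w[i], w[j], rest)
    # 4. Velocities come from the position change; then commit positions
    for i in range(n):
        v[i][0] = (p[i][0] - x[i][0]) / dt
        v[i][1] = (p[i][1] - x[i][1]) / dt
        x[i][0], x[i][1] = p[i][0], p[i][1]
```

For a quick check, pin particle 0 at the origin (inverse mass 0) and hang particle 1 one unit away with a single constraint `(0, 1, 1.0)`. Stepping a few dozen times swings it downward while the “rod” length stays at 1. Weighting each correction by inverse mass is what lets internal constraints like this one avoid adding net linear momentum, and it is also why a zero inverse mass acts as an immovable anchor.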

If you’d rather read than play, this paper does a fair job explaining PBD (and is equally distracting).

Freeloading On Cloud Services

This one’s quick!

While I am having quite a bit of fun with Hetzner’s super cheap ARM servers ($3.00-$5.00 USD/mo.), I’m more than willing to freeload when necessary.

This post provides fairly detailed information about free private VM hosting using the “e2-micro” VM on Google Cloud. According to the author, this instance type can handle a substantial amount of web traffic, and their setup includes free Let’s Encrypt SSL certificates, a free load balancer that enables web app updates without downtime, and even a local Postgres database.

I especially like the post since the author and I share a common philosophy: keep things simple. Kubernetes (et al.) is seriously complex, and overkill if you’re just in the discovery phase, or working on a hobby project. I’m, personally, not even sure its use is warranted in production, but that hot take is for another time.

The author concludes with a step-by-step guide to setting up the VM, including creating SSH keys, building Linux binaries, and setting up A records that point each domain you want to serve at the Google VM’s IP. They also reiterate that the goal is a free solution for compute tasks, and recommend building on your local CPU rather than paying for something like GitHub Actions or a separate build machine.
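For the A-record step, a quick sanity check before sending traffic to the VM is confirming each domain actually resolves to the VM’s external IP. Here is a minimal standard-library sketch, assuming IPv4 A records only; `check_a_records` is a hypothetical helper, not something from the post. It asks the system resolver, so local caching, `/etc/hosts` entries, or DNS propagation delays can all affect the answer.

```python
import socket


def check_a_records(domains, expected_ip):
    """Return {domain: bool}: does the domain's IPv4 lookup include expected_ip?

    check_a_records is a hypothetical helper for illustration.
    """
    results = {}
    for domain in domains:
        try:
            # gethostbyname_ex returns (hostname, aliases, ipv4_addresses)
            _, _, addresses = socket.gethostbyname_ex(domain)
        except OSError:  # covers socket.gaierror / socket.herror (lookup failed)
            addresses = []
        results[domain] = expected_ip in addresses
    return results
```

Usage would look like `check_a_records(["example.com", "www.example.com"], "203.0.113.10")`, where both the domains and the documentation-range IP are placeholders for your own.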

AWS Docs GPT

The previous section linked to a post that shunted folks to Google for free stuff. So, I felt compelled to balance it out with a resource designed to help you use one competitor of theirs (though not necessarily using AWS’ services for free).

AWS Docs GPT is a pretty cool example of the utility value of training a language model on your own document corpus. Said model(s) can 100% still “hallucinate”. But, I’d much rather type, “What is the AWS CLI command for creating a new Route53 A record?” (like you see in the section header) into a trained AI chatbox and deal with some trial-and-error, versus using something like (ugh) Google to just potentially have to do the same trial-and-error dance after reading a terrible Stack Overflow post.

Truth-be-told, I would, now, first enter that same prompt into Perplexity, since it will do all the searching for me, and does a good job getting things right on the first try. But, I still see tons of merit in training AI bots on your organization’s or at least department’s internal documentation and source code repositories, then presenting a small search/retrieve interface to your colleagues. Rather than replacing humans, I see this as the “sweet spot” for present-day AI/LLM/GPT.
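The “search/retrieve” half of that idea can be surprisingly small. Below is a toy TF-IDF retriever in standard-library Python. I’m not claiming this is how AWS Docs GPT works (production setups commonly use embeddings and a vector store instead), and the `TinyRetriever` class and sample documents are hypothetical. The top-ranked chunks are what you would hand to a language model alongside the colleague’s question.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyRetriever:
    """Hypothetical toy retriever: TF-IDF vectors plus cosine similarity."""

    def __init__(self, docs):
        self.docs = docs
        tfs = [Counter(tokenize(d)) for d in docs]
        n = len(docs)
        df = Counter()
        for tf in tfs:
            df.update(tf.keys())  # document frequency: count each term once per doc
        # Smoothed inverse document frequency
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
        self.vecs = [self._vec(tf) for tf in tfs]

    def _vec(self, tf):
        """Turn term counts into a unit-length TF-IDF vector (unknown terms weigh 0)."""
        vec = {t: cnt * self.idf.get(t, 0.0) for t, cnt in tf.items()}
        norm = math.sqrt(sum(x * x for x in vec.values())) or 1.0
        return {t: x / norm for t, x in vec.items()}

    def search(self, query, k=3):
        """Return up to k (doc, score) pairs with a positive cosine score."""
        q = self._vec(Counter(tokenize(query)))
        scored = [
            (sum(qw * dv.get(t, 0.0) for t, qw in q.items()), i)
            for i, dv in enumerate(self.vecs)
        ]
        scored.sort(reverse=True)
        return [(self.docs[i], s) for s, i in scored[:k] if s > 0]
```

Feed it chunks of internal docs or READMEs, call `search("how do I create a Route 53 A record")`, and pass the returned text to whatever model you use as context.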

FIN

Lynn’s latest TITAA is out! Which means I (and you!) have even more new bits to help keep my noggin focused on some new and positive items.

Thanks to all, once more, for your support! ☮
